GPT-3 and avoiding the curse of de-skilling

Should Airplanes Fly Themselves?

The big tech news of last week was the release of OpenAI's GPT-3 tool. If you haven't heard the buzz, here is a Twitter feed showing demos of what the model can do, along with the most-cited must-read: GPT-3 is the biggest thing since bitcoin. We live in an era where nothing is more glamorous than showing off your grasp of both the underlying technology and its potential to revolutionize everything. So Sam Altman himself had to rein in the hype:

The GPT-3 hype is way too much. It’s impressive (thanks for the nice compliments!) but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse. We have a lot still to figure out.

— Sam Altman (@sama) July 19, 2020

It's impressive, and it likely has a long way to go before it is truly usable. Sort of like self-driving cars: so close, yet so far. There are a lot of takes out there. Here's mine: as the world surges forward with automation, I keep returning to the concept of de-skilling and to this article about the Air France 447 crash.

Why do I fixate on this?

AI will change the world, but there is still much to figure out.

One of those things to figure out, entirely separate from the development of the technology itself, is how humans respond to automation, particularly the phenomenon known as de-skilling.

In the article, a design engineer describes the thinking behind the automation software that now flies most airliners:

“‘Well, I’m going to cover the 98 percent of situations I can predict, and the pilots will have to cover the 2 percent I can’t predict.’ This poses a significant problem. I’m going to have them do something only 2 percent of the time. Look at the burden that places on them. First, they have to recognize that it’s time to intervene, when 98 percent of the time they’re not intervening. Then they’re expected to handle the 2 percent we couldn’t predict. What’s the data? How are we going to provide the training? How are we going to provide the supplementary information that will help them make the decisions? There is no easy answer. From the design point of view, we really worry about the tasks we ask them to do just occasionally.”

I said, “Like fly the airplane?”

Yes, that too. Once you put pilots on automation, their manual abilities degrade and their flight-path awareness is dulled: flying becomes a monitoring task, an abstraction on a screen, a mind-numbing wait for the next hotel. Nadine Sarter said that the process is known as de-skilling. It is particularly acute among long-haul pilots with high seniority, especially those swapping flying duties in augmented crews. On Air France 447, for instance, Captain Dubois had logged a respectable 346 hours over the previous six months but had made merely 15 takeoffs and 18 landings. Allowing a generous four minutes at the controls for each takeoff and landing, that meant that Dubois was directly manipulating the side-stick for at most only about four hours a year. The numbers for Bonin were close to the same, and for Robert they were smaller. For all three of them, most of their experience had consisted of sitting in a cockpit seat and watching the machine work.
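The article's "about four hours a year" figure is easy to check. Using its numbers (15 takeoffs and 18 landings in six months, with a generous four minutes at the controls for each) and assuming the same pace over a full year:

$$
\begin{aligned}
(15 + 18) \times 4\ \text{min} &= 132\ \text{min per six months} \\
132\ \text{min} \times 2 &= 264\ \text{min per year} \approx 4.4\ \text{hours per year}
\end{aligned}
$$

That is roughly four hours of hands-on flying in a year, against 346 hours logged in the cockpit in just six months.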

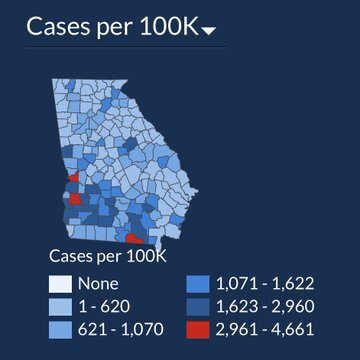

So GPT-3, which some people say is close to being able to write an investment memo or an academic paper, will save us a lot of time and drive a lot of efficiency. It also has a real capacity to de-skill human judgment. Why should you be concerned? Because the average person's capacity for critical thinking and judgment is essential. Look no further than the rolling disaster that is the US Covid-19 response. While we have sleek tools like Teslas and GPT-3, take a look at these charts from the state of Georgia:

July 2, 2020

July 17, 2020

As pointed out on Twitter: In just 15 days, the total number of #COVID19 cases in Georgia is up 49%, but you wouldn’t know it from the state’s data visualization map of cases. The first map is on July 2. The second is July 17. Do you see a 50% case increase? Can you spot how it’s hiding?

Is this chart devious? Or incompetent? Having built a public sector consulting firm and worked with governments worldwide, my professional intuition says it's much more likely incompetence mixed with a dash of ‘don't make the boss look bad.’

The chattering crowd keeps asking: Why is everyone in America so stupid? Can't they see the data is off? Don't they have a good sense of risk? Well, as we automate more and more data collection, analysis, and interpretation, skill development is splitting in two.

Skill bifurcation, or skill inequality, has consequences. What do I mean?

Some people are forced to think, write, and reason every day. That's what I spend most of my day doing. However, suppose you are in an operational role, or one where your job depends on pushing paper and keeping people happy rather than actively interpreting data and analysis. In that case, you are often not forced to think. And so you may not realize that a chart like the one above is misleading. You may think it's great. It shows the hot-zone counties. It has ranges. It's a proper heat map. Job done. Perhaps even well done.

Strong Excel skills were a competitive advantage when I started at McKinsey in 2008. You could build models, delight clients, and explain data insights. Today, much of the Business Analyst role is being automated away. SaaS products powered by AI and ML can do 95% of the tedious part of the job, leaving the analyst with the critical questions: "What are the real insights here? What are we trying to say? What are we doing as a result?"

Answering those questions is challenging work. It requires highly developed skills. That's a muscle you have to build through intense training, hours upon hours of it. How many people are getting that training? Especially when opportunities to exercise judgment on small stakes, make mistakes, and correct them are becoming fewer and farther between.

So you can celebrate and say, ‘Well, isn't this great? We can now make it easy to produce awesome graphs and charts that aren't misleading, because technology can ensure they are done well.’ But at the same time, a shrinking share of people will understand the logic and intuition behind those graphs. They couldn't produce them themselves. They couldn't explain the judgment behind why they are built that way.

This all echoes what has happened to pilots. The average pilot once built up thousands of hours of hands-on flying; now they may get 2.5 minutes at the controls on takeoff and 2.5 minutes on landing, and even those phases are heavily assisted by automation. So, do they know how to fly? Could they take over from the computer in an emergency? For most, probably not. A pilot of a four-seat prop plane in Oaxaca may be a better bet.

As the AI wizards figure out GPT-4, let's make sure we live in a world where the average person is not de-skilled in writing, exercising judgment, and doing the hard work that a computer could do but that people should first learn to do themselves.

Critical thinking and independent judgment are public goods we desperately need to live in a functioning democracy and human society. We cannot afford to be de-skilled.